Lattice QCD Simulation in Research on Hadron Physics and the Standard Model of Elementary Particles

The University of Tsukuba’s Center for Computational Sciences (CCS), a national inter-university research facility, was established in 2004 to further the development of computational sciences. Today we spoke with Associate Professor Tomoteru Yoshie of the Division of Particle Physics and Astrophysics and Associate Professor Osamu Tatebe of the Division of High Performance Computing Systems about JLDG, the data grid created for particle physics research, and about SINET’s role therein.

(Interview date: July 1, 2008)

Could you tell us the purpose of the work done at the University of Tsukuba’s Center for Computational Sciences?

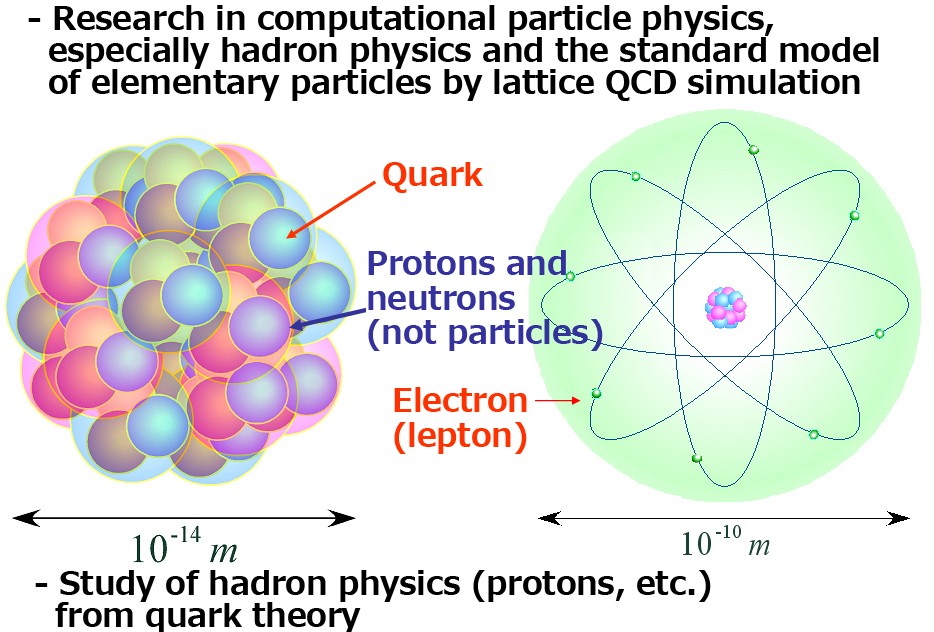

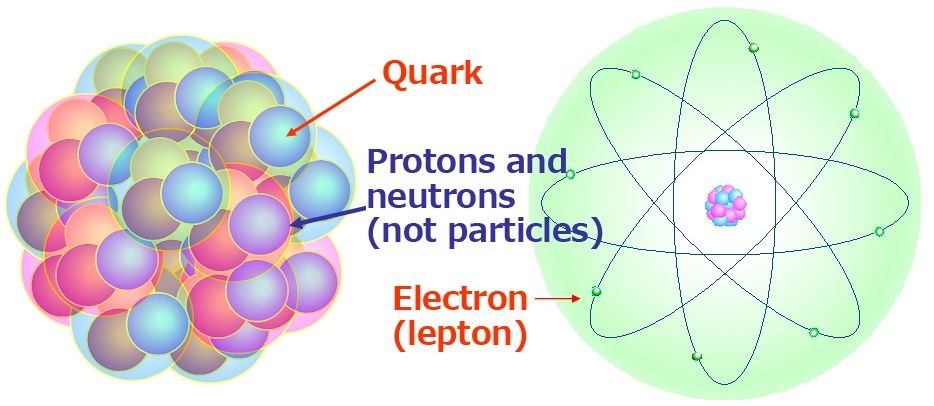

Yoshie: Firstly, we employ large-scale computer simulations and analyses to solve questions in a number of research fields. In my own specialty of computational particle physics, for example, we use lattice QCD simulation to study hadron physics and the standard model of elementary particles.

The standard model of elementary particles is a fairly well-established theory, but a major task of particle physics today is to test whether it really holds and to look for clues pointing to a theory beyond the standard model. Because this kind of research is difficult to do analytically, numerical simulation, in other words the use of computers, becomes essential.

As the name “Center for Computational Sciences” indicates, another important purpose of CCS is advanced research in computer science and informatics. For example, we developed the computer we are currently using, PACS-CS, together with researchers in the computer science field.

So you have researchers in science fields and computer science fields working closely together?

Tatebe: That’s right. At CCS, we computer scientists come together with researchers in three target areas: particle physics and astrophysics; material and life sciences; and the global environment and biological sciences. This kind of center has few parallels in the whole of Japan, I’d say.

We have two divisions in computer science: Computational Informatics and High Performance Computing Systems. I belong to the latter, which designs architectures for high-performance computers and develops the necessary systems software and technology. The demands that cutting-edge research places on computers are very different from those placed on, say, servers for general business systems. In the PACS-CS that Professor Yoshie mentioned, for example, we devised various ways to achieve ultra-high speed in parallel processing, such as an arrangement for high-speed data transfer to the nodes.

I believe you also have a project under way that utilizes networks for particle physics research. What does that involve?

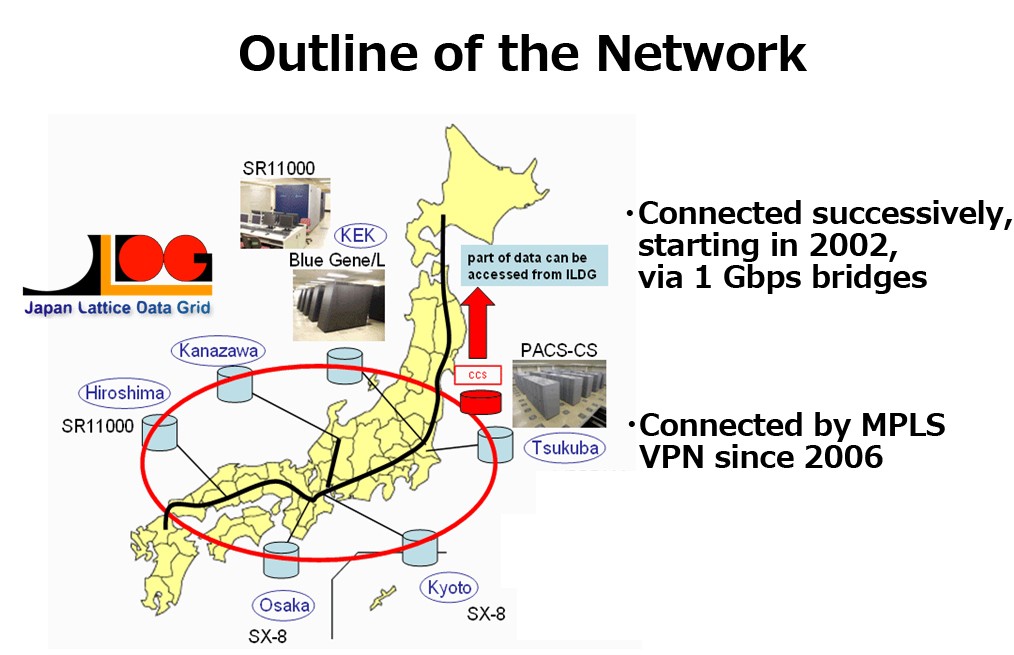

Yoshie: Here it gets a little technical. In our research, the basic data consist of what’s known as “QCD configurations.” Once these data have been generated, we can use them to study various properties of particles. The problem is that enormous computer resources are required to generate QCD configuration data. Even if we use a supercomputer, one machine has trouble keeping up. And so, in 2002, we initiated a project to generate basic data with the supercomputers of multiple research institutes and share them via a network.

For this project, known as “HEPnet-J/sc,” we used SINET’s GbE dedicated lines to construct a wide-area distributed file system linking the University of Tsukuba, KEK (the High Energy Accelerator Research Organization), and Kyoto, Osaka, Hiroshima, and Kanazawa Universities. Specifically, the system now in operation connects the sites’ supercomputers through file servers, which also serve as firewalls, and mirrors the data between those file servers.

I see. That way, the institutes can access one another’s data efficiently.

Yoshie: This approach has had its challenges, though. For instance, in our research we treat a certain quantity of data as a single unit, but that unit ends up distributed over multiple disks. Other issues have included users being unable to keep full track of where the data are located or which server they were mirrored to, and the difficulty of providing support without a “user group” concept.

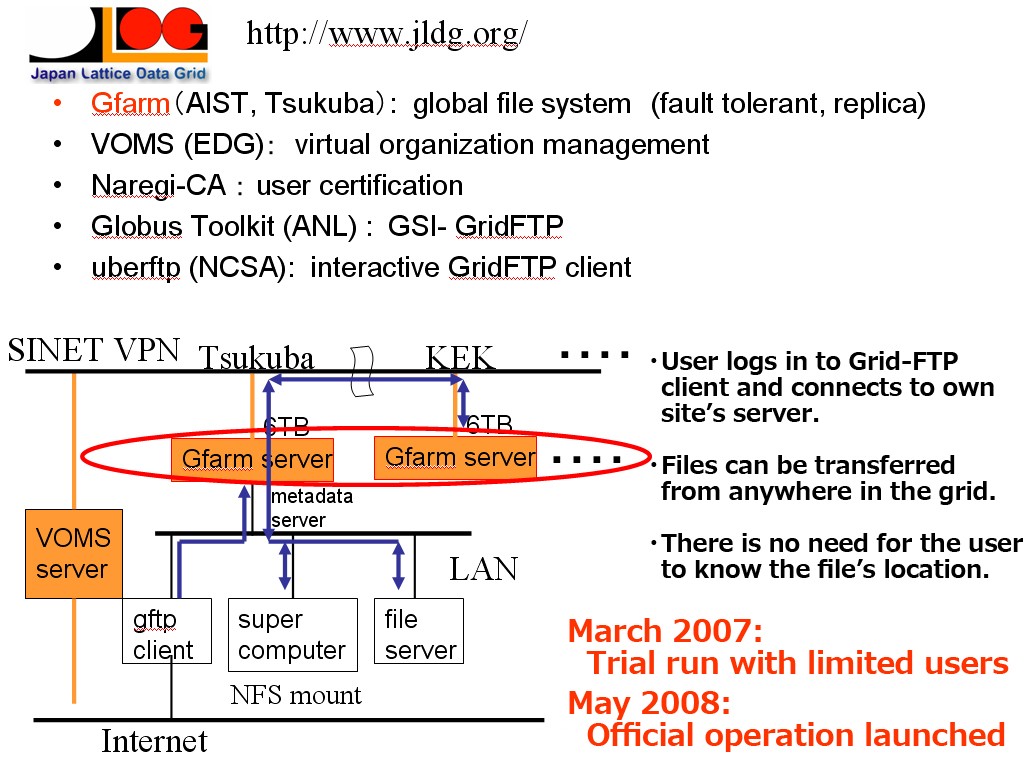

To eliminate these problems, in 2005 we began developing a new system, the Japan Lattice Data Grid (JLDG). In developing this grid, we wanted to achieve two things: a flat data-sharing system with no space limitation, and user management that works across organizations.

JLDG’s components include the global file system Gfarm, whose development Professor Tatebe collaborated on; the virtual organization management tool VOMS; the certificate authority software Naregi-CA for user certificates; and the Globus Toolkit for building grid systems. For the network, instead of the GbE bridges we used previously, we now use SINET3’s L3VPN service (MPLS/VPN).

With JLDG, is the location of data no longer relevant?

Tatebe: That’s right. Users have only to log in to their own organization’s server to freely access the data they require. They don’t even need to know which server those data are actually stored on.

To achieve this structure, however, several design features are necessary. For example, since it takes time to retrieve data from a distant server, in the background we replicate files on the servers at all sites. Since this means frequent copying of data, network speed is crucial. A high-speed network is really a must for a large-scale data-sharing system like JLDG.
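The idea described here, where users refer to files by a location-independent name while copies are made at every site in the background, can be illustrated with a toy replica catalog. This is a minimal sketch under stated assumptions, not Gfarm's actual design or API: the class `ReplicaCatalog`, its methods, the site names, and the file path are all hypothetical, and the "copy" step only updates metadata where a real system would transfer data over the network.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class ReplicaCatalog:
    """Hypothetical metadata service mapping logical paths to the sites holding a copy."""
    sites: list[str]
    replicas: dict[str, set[str]] = field(default_factory=dict)
    # Queue of pending background copies: (logical path, source site, destination site).
    pending: deque = field(default_factory=deque)

    def register(self, path: str, site: str) -> None:
        """Record a file newly written at `site` and queue copies to every other site."""
        self.replicas.setdefault(path, set()).add(site)
        for dst in self.sites:
            if dst != site:
                self.pending.append((path, site, dst))

    def locate(self, path: str, client_site: str) -> str:
        """Return the site a client should read from, preferring its own site."""
        holders = self.replicas[path]
        if client_site in holders:
            return client_site  # fast local read
        return sorted(holders)[0]  # otherwise fall back to a remote copy (deterministic pick)

    def run_background_replication(self) -> int:
        """Drain the copy queue; a real system would move the bytes over the WAN here."""
        copied = 0
        while self.pending:
            path, _src, dst = self.pending.popleft()
            if dst not in self.replicas[path]:
                self.replicas[path].add(dst)
                copied += 1
        return copied


# Usage with hypothetical site names and a hypothetical logical path.
catalog = ReplicaCatalog(sites=["tsukuba", "kek", "kyoto"])
catalog.register("/lattice/ensemble1/config_0001", "tsukuba")
print(catalog.locate("/lattice/ensemble1/config_0001", "kyoto"))  # tsukuba (remote read)
print(catalog.run_background_replication())  # 2
print(catalog.locate("/lattice/ensemble1/config_0001", "kyoto"))  # kyoto (local read)
```

Before replication finishes, a reader at another site is sent to the remote copy; afterwards every site reads locally. The volume of copying this implies is why the interviewees stress network speed.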

So SINET, in other words, is what makes these features possible. What is the current status of JLDG?

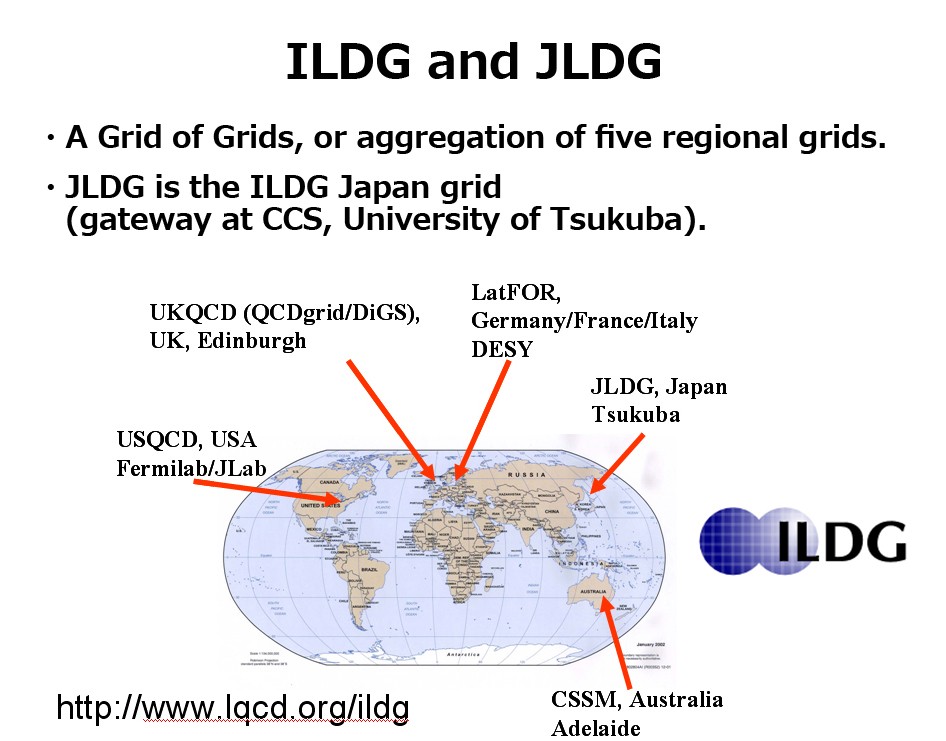

Yoshie: After running it in trial mode from March 2007, we launched it officially in May 2008, and user registration is going well. Further, similar data grids are also being built overseas, and the International Lattice Data Grid (ILDG) functions as a “grid of grids” linking all of these.

As a member of the ILDG, together with grids in the UK, Europe, the U.S., and Australia, JLDG provides QCD configurations via SINET to researchers both in Japan and overseas. Our data transfer records show that at present about 1,000 data files are accessed every month.

I hope JLDG will help yield new discoveries.

Lastly, could you tell us about your plans for the future?

Yoshie: At present, JLDG is being used to allow researchers in computational particle physics to access public data, but in the near future we want to make it usable on a routine basis as research infrastructure. And since even the basic tasks of large-scale data replication and transferring data from remote sites on the grid require a high-speed network, we are counting on SINET’s services.

Tatebe: What it comes down to is constructing high-speed computers. That involves many elements: computer architecture, file systems, communications software, and different types of libraries, to name but a few. We intend to pursue our research and development work in these areas until we realize a seamless and efficient system.